template<typename BlockType = FirstFitBlock<uintptr_t>>

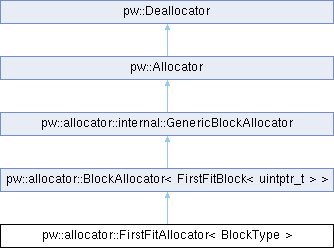

class pw::allocator::FirstFitAllocator< BlockType >

Block allocator that uses a "first-fit" allocation strategy, optionally splitting large and small allocations.

In this strategy, the allocator handles an allocation request by starting at the beginning of the range of blocks and looking for the first one which can satisfy the request.
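Below is a minimal sketch of the first-fit search. It assumes a simplified model in which each block is just a size and an in-use flag. `SimpleBlock`, `FindFirstFit`, and `MarkUsed` are hypothetical names used only for illustration and are not part of pw::allocator. A real block allocator would also handle alignment, splitting, and merging.

```cpp
#include <cassert>
#include <cstddef>
#include <optional>
#include <vector>

// Hypothetical, simplified stand-in for a block. The real BlockType also
// tracks alignment, neighbors, and poisoning, which are omitted here.
struct SimpleBlock {
  std::size_t size;  // usable bytes in the block
  bool used;         // true once the block has been handed out
};

// First-fit search: scan from the beginning of the range and return the
// index of the first free block large enough for `request` bytes.
std::optional<std::size_t> FindFirstFit(const std::vector<SimpleBlock>& blocks,
                                        std::size_t request) {
  for (std::size_t i = 0; i < blocks.size(); ++i) {
    if (!blocks[i].used && blocks[i].size >= request) {
      return i;
    }
  }
  return std::nullopt;  // no block can satisfy the request
}

// Hypothetical helper: claims the chosen block. A real allocator would
// also split any unused tail off into a new free block.
void MarkUsed(std::vector<SimpleBlock>& blocks, std::size_t index) {
  assert(!blocks[index].used && "block is already allocated");
  blocks[index].used = true;
}
```

Consider free blocks of 16, 64, and 32 bytes. A 24-byte request takes the 64-byte block at index 1 because it is the first block that fits, even though the 32-byte block would be a tighter fit.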

Optionally, callers may set a "threshold" value. If a threshold is set, requests smaller than it are satisfied using the last compatible block in the range rather than the first. This keeps small and large allocations at opposite ends of the region, which can reduce overall fragmentation.
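The threshold split can be sketched the same way. This sketch reuses the simplified block model and also assumes that a threshold of 0 means "not set": because no request is smaller than 0, every request then uses the forward search. `SimpleBlock`, `FindBlock`, and `Claim` are hypothetical names and do not come from pw::allocator.

```cpp
#include <cassert>
#include <cstddef>
#include <optional>
#include <vector>

// Hypothetical, simplified stand-in for a block (size plus in-use flag).
struct SimpleBlock {
  std::size_t size;  // usable bytes in the block
  bool used;         // true once the block has been handed out
};

// True if `block` is free and large enough for `request` bytes.
bool Fits(const SimpleBlock& block, std::size_t request) {
  return !block.used && block.size >= request;
}

// Threshold-aware first fit. Requests smaller than `threshold` take the
// last compatible block (scanning from the end). All other requests take
// the first compatible block (scanning from the beginning).
std::optional<std::size_t> FindBlock(const std::vector<SimpleBlock>& blocks,
                                     std::size_t request,
                                     std::size_t threshold) {
  if (request < threshold) {
    for (std::size_t i = blocks.size(); i > 0; --i) {
      if (Fits(blocks[i - 1], request)) {
        return i - 1;
      }
    }
  } else {
    for (std::size_t i = 0; i < blocks.size(); ++i) {
      if (Fits(blocks[i], request)) {
        return i;
      }
    }
  }
  return std::nullopt;  // nothing fits in either direction
}

// Hypothetical helper: marks a chosen block as allocated.
void Claim(std::vector<SimpleBlock>& blocks, std::size_t index) {
  assert(!blocks[index].used && "block is already allocated");
  blocks[index].used = true;
}
```

Suppose the free blocks are 64, 16, and 64 bytes and the threshold is 32. An 8-byte request takes index 2, the last block that fits, while a 48-byte request takes index 0. Small allocations therefore accumulate toward the end of the region and large ones toward the beginning.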

Public Member Functions

| Return type | Member | Description |
|---|---|---|
| `constexpr` | `FirstFitAllocator() = default` | Constexpr constructor. Callers must explicitly call `Init`. |
| | `FirstFitAllocator(ByteSpan region)` | |
| | `FirstFitAllocator(ByteSpan region, size_t threshold)` | |
| `void` | `set_threshold(size_t threshold)` | Sets the threshold value for which requests are considered "large". |

Public Member Functions inherited from pw::allocator::BlockAllocator< BlockType_ >

| Return type | Member | Description |
|---|---|---|
| `Range` | `blocks() const` | Returns a Range of blocks tracking the memory of this allocator. |
| `void` | `Init(ByteSpan region)` | |
| `size_t` | `GetMaxAllocatable()` | |
| `Fragmentation` | `MeasureFragmentation() const` | Returns fragmentation information for the block allocator's memory region. |

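Public Member Functions inherited from pw::allocator::internal::GenericBlockAllocator

| Return type | Member | Description |
|---|---|---|
| | `GenericBlockAllocator(const GenericBlockAllocator &) = delete` | |
| `GenericBlockAllocator &` | `operator=(const GenericBlockAllocator &) = delete` | |
| | `GenericBlockAllocator(GenericBlockAllocator &&) = delete` | |
| `GenericBlockAllocator &` | `operator=(GenericBlockAllocator &&) = delete` | |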

Public Member Functions inherited from pw::Allocator

| Return type | Member | Description |
|---|---|---|
| `void *` | `Allocate(Layout layout)` | |
| `std::enable_if_t<!std::is_array_v<T>, T *>` | `template<typename T, int &... kExplicitGuard, typename... Args> New(Args &&... args)` | |
| `ElementType *` | `template<typename T, int &... kExplicitGuard, typename ElementType = std::remove_extent_t<T>, std::enable_if_t<is_bounded_array_v<T>, int> = 0> New()` | |
| `ElementType *` | `template<typename T, int &... kExplicitGuard, typename ElementType = std::remove_extent_t<T>, std::enable_if_t<is_unbounded_array_v<T>, int> = 0> New(size_t count)` | |
| `ElementType *` | `template<typename T, int &... kExplicitGuard, typename ElementType = std::remove_extent_t<T>, std::enable_if_t<is_unbounded_array_v<T>, int> = 0> New(size_t count, size_t alignment)` | Constructs an `alignment`-byte aligned array of `count` objects. |
| `T *` | `template<typename T> NewArray(size_t count)` | |
| `T *` | `template<typename T> NewArray(size_t count, size_t alignment)` | |
| `UniquePtr<T>` | `template<typename T, int &... kExplicitGuard, std::enable_if_t<!std::is_array_v<T>, int> = 0, typename... Args> MakeUnique(Args &&... args)` | |
| `UniquePtr<T>` | `template<typename T, int &... kExplicitGuard, std::enable_if_t<is_unbounded_array_v<T>, int> = 0> MakeUnique(size_t size)` | |
| `UniquePtr<T>` | `template<typename T, int &... kExplicitGuard, std::enable_if_t<is_unbounded_array_v<T>, int> = 0> MakeUnique(size_t size, size_t alignment)` | |
| `UniquePtr<T>` | `template<typename T, int &... kExplicitGuard, std::enable_if_t<is_bounded_array_v<T>, int> = 0> MakeUnique()` | |
| `UniquePtr<T[]>` | `template<typename T> MakeUniqueArray(size_t size)` | |
| `UniquePtr<T[]>` | `template<typename T> MakeUniqueArray(size_t size, size_t alignment)` | |
| `SharedPtr<T>` | `template<typename T, int &... kExplicitGuard, std::enable_if_t<!std::is_array_v<T>, int> = 0, typename... Args> MakeShared(Args &&... args)` | |
| `SharedPtr<T>` | `template<typename T, int &... kExplicitGuard, std::enable_if_t<is_unbounded_array_v<T>, int> = 0> MakeShared(size_t size)` | |
| `SharedPtr<T>` | `template<typename T, int &... kExplicitGuard, std::enable_if_t<is_unbounded_array_v<T>, int> = 0> MakeShared(size_t size, size_t alignment)` | |
| `SharedPtr<T>` | `template<typename T, int &... kExplicitGuard, std::enable_if_t<is_bounded_array_v<T>, int> = 0> MakeShared()` | |
| `bool` | `Resize(void *ptr, size_t new_size)` | |
| `bool` | `Resize(void *ptr, Layout layout, size_t new_size)` | |
| `void *` | `Reallocate(void *ptr, Layout new_layout)` | |
| `void *` | `Reallocate(void *ptr, Layout old_layout, size_t new_size)` | |
| `size_t` | `GetAllocated() const` | |

Public Member Functions inherited from pw::Deallocator

| Return type | Member | Description |
|---|---|---|
| `constexpr const Capabilities &` | `capabilities() const` | |
| `bool` | `HasCapability(Capability capability) const` | Returns whether a given capability is enabled for this object. |
| `void` | `Deallocate(void *ptr)` | |
| `void` | `Deallocate(void *ptr, Layout layout)` | |
| `void` | `template<typename ElementType> DeleteArray(ElementType *ptr, size_t count)` | |
| `StatusWithSize` | `GetCapacity() const` | |
| `bool` | `IsEqual(const Deallocator &other) const` | |
| `void` | `template<typename T, int &... kExplicitGuard, std::enable_if_t<!std::is_array_v<T>, int> = 0> Delete(T *ptr)` | |
| `void` | `template<typename T, int &... kExplicitGuard, typename ElementType = std::remove_extent_t<T>, std::enable_if_t<is_bounded_array_v<T>, int> = 0> Delete(ElementType *ptr)` | |
| `void` | `template<typename T, int &... kExplicitGuard, typename ElementType = std::remove_extent_t<T>, std::enable_if_t<is_unbounded_array_v<T>, int> = 0> Delete(ElementType *ptr, size_t count)` | |

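Static Public Attributes inherited from pw::allocator::BlockAllocator< BlockType_ >

| Type | Member | Description |
|---|---|---|
| `static constexpr Capabilities` | `kCapabilities` | |
| `static constexpr size_t` | `kPoisonInterval = PW_ALLOCATOR_BLOCK_POISON_INTERVAL` | |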

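Protected Member Functions inherited from pw::allocator::BlockAllocator< BlockType_ >

| Return type | Member | Description |
|---|---|---|
| `void` | `Init(BlockType *begin)` | |
| `internal::copy_const_ptr_t<Ptr, BlockType *>` | `template<typename Ptr> FromUsableSpace(Ptr ptr) const` | |
| `virtual void` | `DeallocateBlock(BlockType *&&block)` | |

Protected Member Functions inherited from pw::allocator::internal::GenericBlockAllocator

| Return type | Member | Description |
|---|---|---|
| `constexpr` | `GenericBlockAllocator(Capabilities capabilities)` | |

Protected Member Functions inherited from pw::Allocator

| Return type | Member | Description |
|---|---|---|
| `constexpr` | `Allocator() = default` | TODO(b/326509341): Remove when downstream consumers migrate. |
| `constexpr` | `Allocator(const Capabilities &capabilities)` | |

Protected Member Functions inherited from pw::Deallocator

| Return type | Member | Description |
|---|---|---|
| `constexpr` | `Deallocator() = default` | TODO(b/326509341): Remove when downstream consumers migrate. |
| `constexpr` | `Deallocator(const Capabilities &capabilities)` | |
| `UniquePtr<T>` | `template<typename T, int &... kExplicitGuard, typename ElementType = std::remove_extent_t<T>, std::enable_if_t<is_unbounded_array_v<T>, int> = 0> WrapUnique(ElementType *ptr, size_t size)` | |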

Static Protected Member Functions inherited from pw::allocator::internal::GenericBlockAllocator

| Return type | Member | Description |
|---|---|---|
| `static constexpr Capabilities` | `template<typename BlockType> GetCapabilities()` | |
| `static void` | `CrashOnAllocated(const void *allocated)` | |
| `static void` | `CrashOnOutOfRange(const void *freed)` | |
| `static void` | `CrashOnDoubleFree(const void *freed)` | Crashes with an informational message that a given block was freed twice. |

Static Protected Attributes inherited from pw::Deallocator

| Type | Member | Description |
|---|---|---|
| `static constexpr bool` | `template<typename T> is_bounded_array_v` | |
| `static constexpr bool` | `template<typename T> is_unbounded_array_v` | |
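The `is_bounded_array_v` and `is_unbounded_array_v` traits appear in the `std::enable_if_t` conditions that separate the `New`, `MakeUnique`, `MakeShared`, and `Delete` overloads for a single `T`, a bounded array `T[N]`, and an unbounded array `T[]`. The following minimal C++17 sketch shows one way such traits and overload selection can be written. `IsBoundedArray`, `IsUnboundedArray`, and `ElementCount` are hypothetical names, and this sketch is not pw::allocator's actual definition. C++20 provides `std::is_bounded_array_v` and `std::is_unbounded_array_v` directly.

```cpp
#include <cstddef>
#include <type_traits>

// C++17 stand-ins for the C++20 std::is_bounded_array / is_unbounded_array.
template <typename T>
struct IsBoundedArray : std::false_type {};
template <typename T, std::size_t N>
struct IsBoundedArray<T[N]> : std::true_type {};

template <typename T>
struct IsUnboundedArray : std::false_type {};
template <typename T>
struct IsUnboundedArray<T[]> : std::true_type {};

template <typename T>
inline constexpr bool is_bounded_array_v = IsBoundedArray<T>::value;
template <typename T>
inline constexpr bool is_unbounded_array_v = IsUnboundedArray<T>::value;

// Hypothetical overload set: SFINAE on the traits picks exactly one
// overload per kind of T, in the same way New<T>() differs from New<T[]>(count).

// Non-array T: a single object.
template <typename T, std::enable_if_t<!std::is_array_v<T>, int> = 0>
constexpr std::size_t ElementCount() {
  return 1;
}

// Bounded array T[N]: the element count is part of the type.
template <typename T, std::enable_if_t<is_bounded_array_v<T>, int> = 0>
constexpr std::size_t ElementCount() {
  return std::extent_v<T>;
}

// Unbounded array T[]: the caller must supply the element count.
template <typename T, std::enable_if_t<is_unbounded_array_v<T>, int> = 0>
constexpr std::size_t ElementCount(std::size_t count) {
  return count;
}
```

Here, `ElementCount<int[4]>()` yields 4. `ElementCount<int[]>()` with no argument fails to compile because only the unbounded-array overload accepts `int[]`, and that overload requires a count.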

Inherited members:

- Public types: from pw::allocator::BlockAllocator< BlockType_ > and pw::Deallocator.
- Public member functions: from pw::allocator::BlockAllocator< BlockType_ >, pw::allocator::internal::GenericBlockAllocator, pw::Allocator, and pw::Deallocator.
- Static public attributes: from pw::allocator::BlockAllocator< BlockType_ >.
- Protected member functions: from pw::allocator::BlockAllocator< BlockType_ >, pw::allocator::internal::GenericBlockAllocator, pw::Allocator, and pw::Deallocator.
- Static protected member functions: from pw::allocator::internal::GenericBlockAllocator.
- Static protected attributes: from pw::Deallocator.