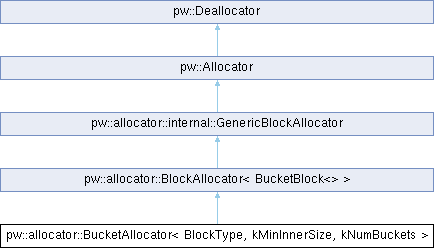

Block allocator that uses sized buckets of free blocks.

In this strategy, the allocator handles an allocation request by starting with the bucket with the smallest size that is larger than the requested size. It tries to allocate using the blocks in that block, if any, before trying the bucket with the next largest size.

On deallocation, blocks are placed in the bucket of the smallest size that is larger than usable space of the block being freed.

The last bucket always has an unbounded size.

As an example, assume that the allocator is configured with a minimum block inner size of 64 and 5 buckets. The internal state may look like the following:

Public Member Functions | |

| constexpr | BucketAllocator () |

Constexpr constructor. Callers must explicitly call Init. | |

| BucketAllocator (ByteSpan region) | |

Public Member Functions inherited from pw::allocator::BlockAllocator< BlockType_ > Public Member Functions inherited from pw::allocator::BlockAllocator< BlockType_ > | |

| Range | blocks () const |

Returns a Range of blocks tracking the memory of this allocator. | |

| void | Init (ByteSpan region) |

| size_t | GetMaxAllocatable () |

| Fragmentation | MeasureFragmentation () const |

| Returns fragmentation information for the block allocator's memory region. | |

Public Member Functions inherited from pw::allocator::internal::GenericBlockAllocator Public Member Functions inherited from pw::allocator::internal::GenericBlockAllocator | |

| GenericBlockAllocator (const GenericBlockAllocator &)=delete | |

| GenericBlockAllocator & | operator= (const GenericBlockAllocator &)=delete |

| GenericBlockAllocator (GenericBlockAllocator &&)=delete | |

| GenericBlockAllocator & | operator= (GenericBlockAllocator &&)=delete |

Public Member Functions inherited from pw::Allocator Public Member Functions inherited from pw::Allocator | |

| void * | Allocate (Layout layout) |

| template<typename T , int &... kExplicitGuard, typename... Args> | |

| std::enable_if_t<!std::is_array_v< T >, T * > | New (Args &&... args) |

| template<typename T , int &... kExplicitGuard, typename ElementType = std::remove_extent_t<T>, std::enable_if_t< is_bounded_array_v< T >, int > = 0> | |

| ElementType * | New () |

| template<typename T , int &... kExplicitGuard, typename ElementType = std::remove_extent_t<T>, std::enable_if_t< is_unbounded_array_v< T >, int > = 0> | |

| ElementType * | New (size_t count) |

| template<typename T , int &... kExplicitGuard, typename ElementType = std::remove_extent_t<T>, std::enable_if_t< is_unbounded_array_v< T >, int > = 0> | |

| ElementType * | New (size_t count, size_t alignment) |

Constructs an alignment-byte aligned array of count objects. | |

| template<typename T > | |

| T * | NewArray (size_t count) |

| template<typename T > | |

| T * | NewArray (size_t count, size_t alignment) |

| template<typename T , int &... kExplicitGuard, std::enable_if_t<!std::is_array_v< T >, int > = 0, typename... Args> | |

| UniquePtr< T > | MakeUnique (Args &&... args) |

| template<typename T , int &... kExplicitGuard, std::enable_if_t< is_unbounded_array_v< T >, int > = 0> | |

| UniquePtr< T > | MakeUnique (size_t size) |

| template<typename T , int &... kExplicitGuard, std::enable_if_t< is_unbounded_array_v< T >, int > = 0> | |

| UniquePtr< T > | MakeUnique (size_t size, size_t alignment) |

| template<typename T , int &... kExplicitGuard, std::enable_if_t< is_bounded_array_v< T >, int > = 0> | |

| UniquePtr< T > | MakeUnique () |

| template<typename T > | |

| UniquePtr< T[]> | MakeUniqueArray (size_t size) |

| template<typename T > | |

| UniquePtr< T[]> | MakeUniqueArray (size_t size, size_t alignment) |

| template<typename T , int &... kExplicitGuard, std::enable_if_t<!std::is_array_v< T >, int > = 0, typename... Args> | |

| SharedPtr< T > | MakeShared (Args &&... args) |

| template<typename T , int &... kExplicitGuard, std::enable_if_t< is_unbounded_array_v< T >, int > = 0> | |

| SharedPtr< T > | MakeShared (size_t size) |

| template<typename T , int &... kExplicitGuard, std::enable_if_t< is_unbounded_array_v< T >, int > = 0> | |

| SharedPtr< T > | MakeShared (size_t size, size_t alignment) |

| template<typename T , int &... kExplicitGuard, std::enable_if_t< is_bounded_array_v< T >, int > = 0> | |

| SharedPtr< T > | MakeShared () |

| bool | Resize (void *ptr, size_t new_size) |

| bool | Resize (void *ptr, Layout layout, size_t new_size) |

| void * | Reallocate (void *ptr, Layout new_layout) |

| void * | Reallocate (void *ptr, Layout old_layout, size_t new_size) |

| size_t | GetAllocated () const |

Public Member Functions inherited from pw::Deallocator Public Member Functions inherited from pw::Deallocator | |

| constexpr const Capabilities & | capabilities () const |

| bool | HasCapability (Capability capability) const |

| Returns whether a given capability is enabled for this object. | |

| void | Deallocate (void *ptr) |

| void | Deallocate (void *ptr, Layout layout) |

| template<typename ElementType > | |

| void | DeleteArray (ElementType *ptr, size_t count) |

| StatusWithSize | GetCapacity () const |

| bool | IsEqual (const Deallocator &other) const |

| template<typename T , int &... kExplicitGuard, std::enable_if_t<!std::is_array_v< T >, int > = 0> | |

| void | Delete (T *ptr) |

| template<typename T , int &... kExplicitGuard, typename ElementType = std::remove_extent_t<T>, std::enable_if_t< is_bounded_array_v< T >, int > = 0> | |

| void | Delete (ElementType *ptr) |

| template<typename T , int &... kExplicitGuard, typename ElementType = std::remove_extent_t<T>, std::enable_if_t< is_unbounded_array_v< T >, int > = 0> | |

| void | Delete (ElementType *ptr, size_t count) |

Private Member Functions | |

| size_t | DoGetMaxAllocatable () override |

| BlockResult< BlockType > | ChooseBlock (Layout layout) override |

| void | ReserveBlock (BlockType &block) override |

| void | RecycleBlock (BlockType &block) override |

Additional Inherited Members | |

Public Types inherited from pw::allocator::BlockAllocator< BlockType_ > Public Types inherited from pw::allocator::BlockAllocator< BlockType_ > | |

| using | BlockType = BlockType_ |

| using | Range = typename BlockType::Range |

Public Types inherited from pw::Deallocator Public Types inherited from pw::Deallocator | |

| using | Capabilities = allocator::Capabilities |

| using | Capability = allocator::Capability |

| using | Layout = allocator::Layout |

Static Public Attributes inherited from pw::allocator::BlockAllocator< BlockType_ > Static Public Attributes inherited from pw::allocator::BlockAllocator< BlockType_ > | |

| static constexpr Capabilities | kCapabilities |

| static constexpr size_t | kPoisonInterval = PW_ALLOCATOR_BLOCK_POISON_INTERVAL |

Protected Member Functions inherited from pw::allocator::BlockAllocator< BlockType_ > Protected Member Functions inherited from pw::allocator::BlockAllocator< BlockType_ > | |

| void | Init (BlockType *begin) |

| template<typename Ptr > | |

| internal::copy_const_ptr_t< Ptr, BlockType * > | FromUsableSpace (Ptr ptr) const |

| virtual void | DeallocateBlock (BlockType *&&block) |

Protected Member Functions inherited from pw::allocator::internal::GenericBlockAllocator Protected Member Functions inherited from pw::allocator::internal::GenericBlockAllocator | |

| constexpr | GenericBlockAllocator (Capabilities capabilities) |

Protected Member Functions inherited from pw::Allocator Protected Member Functions inherited from pw::Allocator | |

| constexpr | Allocator ()=default |

| TODO(b/326509341): Remove when downstream consumers migrate. | |

| constexpr | Allocator (const Capabilities &capabilities) |

Protected Member Functions inherited from pw::Deallocator Protected Member Functions inherited from pw::Deallocator | |

| constexpr | Deallocator ()=default |

| TODO(b/326509341): Remove when downstream consumers migrate. | |

| constexpr | Deallocator (const Capabilities &capabilities) |

| template<typename T , int &... kExplicitGuard, typename ElementType = std::remove_extent_t<T>, std::enable_if_t< is_unbounded_array_v< T >, int > = 0> | |

| UniquePtr< T > | WrapUnique (ElementType *ptr, size_t size) |

Static Protected Member Functions inherited from pw::allocator::internal::GenericBlockAllocator Static Protected Member Functions inherited from pw::allocator::internal::GenericBlockAllocator | |

| template<typename BlockType > | |

| static constexpr Capabilities | GetCapabilities () |

| static void | CrashOnAllocated (const void *allocated) |

| static void | CrashOnOutOfRange (const void *freed) |

| static void | CrashOnDoubleFree (const void *freed) |

| Crashes with an informational message that a given block was freed twice. | |

Static Protected Attributes inherited from pw::Deallocator Static Protected Attributes inherited from pw::Deallocator | |

| template<typename T > | |

| static constexpr bool | is_bounded_array_v |

| template<typename T > | |

| static constexpr bool | is_unbounded_array_v |

|

inlineexplicit |

Non-constexpr constructor that automatically calls Init.

| [in] | region | Region of memory to use when satisfying allocation requests. The region MUST be large enough to fit an aligned block with overhead. It MUST NOT be larger than what is addressable by OffsetType. |

|

inlineoverrideprivatevirtual |

Selects a free block to allocate from.

This method represents the allocator-specific strategy of choosing which block should be used to satisfy allocation requests. If the returned result indicates success, block will be replaced by the chosen block.

| block | Used to return the chosen block. |

| layout | Same as Allocator::Allocate. |

Implements pw::allocator::BlockAllocator< BlockType_ >.

|

inlineoverrideprivatevirtual |

Returns the largest single allocation that can succeed, given the current state of the allocator.

The largest allocation possible at any given time is the inner size of the the largest free block. This method may be expensive to call if the block allocator implementation does not track its largest block. As a result, it should primarily be used for diagnostic purposes after an allocation failure, e.g.

Note that this method does not consider alignment. An allocation with a large alignment requirement may fail even when a large enough block is available if that block cannot satisfy the alignment requirement.

Implements pw::allocator::BlockAllocator< BlockType_ >.

|

inlineoverrideprivatevirtual |

Indicates that a block is now free.

Does nothing by default. Derived class may overload to do additional bookkeeeping.

| block | The block being freed. |

Reimplemented from pw::allocator::BlockAllocator< BlockType_ >.

|

inlineoverrideprivatevirtual |

Indicates that a block will no longer be free.

Does nothing by default. Derived class may overload to do additional bookkeeeping.

| block | The block being freed. |

Reimplemented from pw::allocator::BlockAllocator< BlockType_ >.